Late last year, I started exploring how to extract metadata from product drawings. Part numbers, material specifications, revision history, manufacturing process notes. The kind of information that lives in title blocks and needs to end up in a PLM database. I tried various OCR techniques - with the tolerance call outs, dimensions, it was a mess and I stretched the limit of what can be done with regular expressions. Then I found PaddleOCR-VL. It is Vision Language Model (VLM) with a few preprocessors finetuned for OCR tasks.

PaddleOCR-VL-0.9B integrates a NaViT-style dynamic resolution visual encoder with the ERNIE-4.5-0.3B language model. It uses a two-stage approach. First, PP-DocLayoutV2 performs layout analysis, localizing semantic regions and predicting reading order. Then PaddleOCR-VL-0.9B recognizes the content. A post-processing module outputs structured Markdown and JSON. On OmniDocBench v1.5, it achieves an overall score of 92.56, surpassing MinerU2.5-1.2B (90.67) and general VLMs like Qwen2.5-VL-72B. A model 80 times smaller achieving higher accuracy.

For my use case, I used a two-stage pipeline:

PDF → Images → PaddleOCR-VL (OCR) → Qwen3-0.6B (Extraction) → Structured JSON

The input is the entire drawing in pdf.

PaddleOCR-VL handles the OCR. Then I pass the extracted text to Qwen3-0.6B, a 600M parameter LLM, for structured information extraction. No complex regex patterns. The LLM figures out which text corresponds to which field.

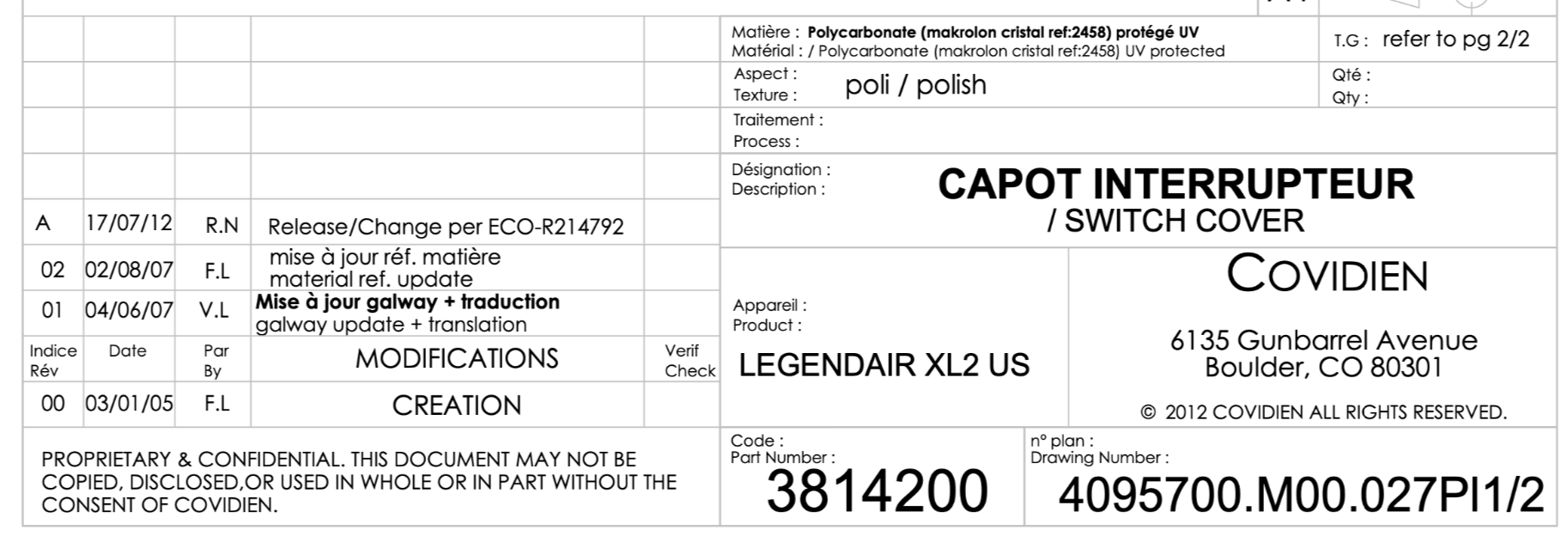

The output looks like this:

{

"part_number": "3814200",

"drawing_number": "4095700.M00.027PI1/2",

"material": "Polycarbonate (makrolon cristal ref:2458)",

"finish": "poli",

"description": "CAPOT INTERRUPTEUR / SWITCH COVER",

"product": "LEGENDAIR XL2 US"

}

The whole thing runs on a laptop with 16GB RAM. GPU helps but is not required. Even with mutliple waves of digital transformatio, product manufactures accumulated vast archives of engineering drawings that containe the recipe, part numbers, material specifications, supplier references, revision histories.Cloud-based OCR means documents leave your network, they might be logged or used for training - which could lead to IP Leaks.

VLMs for OCR is promising, 0.9B parameter model changes this. It runs locally on a computer without network access and the documents never leave your infrastructure. The Apache 2.0 license allows commercial use for free.

I have shared my extraction pipeline on GitHub: PaddleOCR_Engineering_Drawings.